Abstract

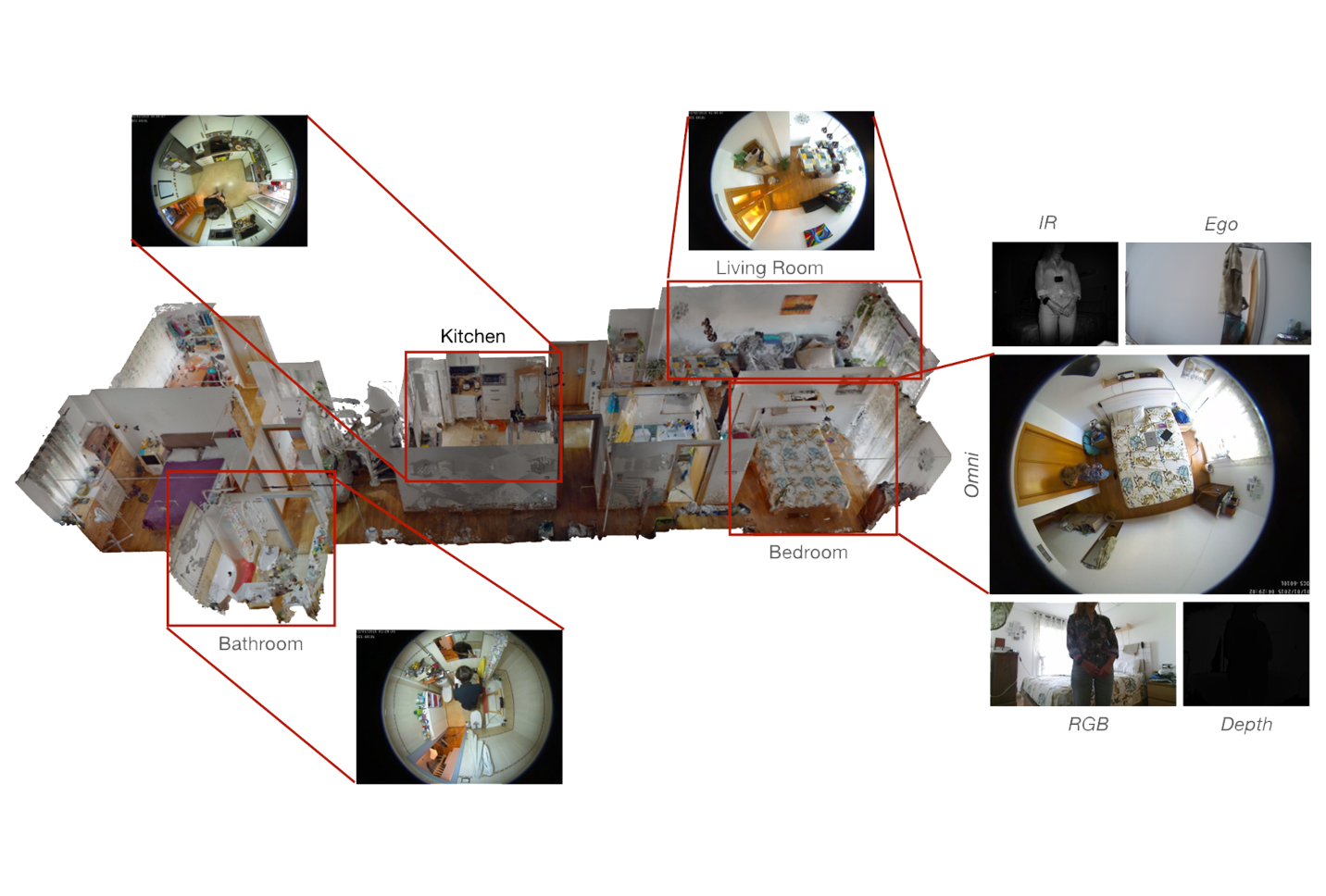

We introduce ODIN (the OmniDirectional INdoor dataset), the first large-scale multi-modal dataset aimed at spurring research using top-view omnidirectional cameras in challenges related to human behaviour understanding. Recorded in real-life indoor environments with varying levels of occlusion, the dataset contains images of participants performing various activities of daily living. Along with the omnidirectional images, the dataset provides additional synchronized modalities. These include (1) RGB, infrared, and depth images from multiple RGB-D cameras, (2) egocentric videos, (3) physiological signals and accelerometer readings from a smart bracelet, and (4) 3D scans of the recording environments. To the best of our knowledge, ODIN is also the first dataset to provide camera-frame 3D human pose estimates for omnidirectional images, which are obtained using our novel pipeline. The project is open source and available at https://odin-dataset.github.io.

Figure 3: Overview of ODIN, a large-scale omnidirectional dataset for Human Behaviour Understanding. Each sequence is composed of the 3D scan of the recording location and omnidirectional RGB images, as well as 5 extra modalities: (1) depth, (2) IR, (3) RGB images from side views, (4) RGB egocentric images, and (5) biometric signals from a wearable device. Four of the five environments (kitchen, living room, bathroom, and bedroom) are represented in the figure; the activity room can be seen in Fig. 4.

Citation

Ravi, Siddharth, et al. “ODIN: An OmniDirectional INdoor Dataset Capturing Activities of Daily Living From Multiple Synchronized Modalities.” 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) (2023): 1-8.